Four Battlegrounds: Power in the Age of Artificial Intelligence by Paul Scharre, W.W. Norton.

The most a writer can ask of readers is that a work chart a new course for their thoughts after they put it down. Paul Scharre’s book Four Battlegrounds: Power in the Age of Artificial Intelligence does just that.

Four Battlegrounds straddles two lines of thought: the transformative impact of artificial intelligence (AI) on power and warfare in the 21st century, and the dangers AI presents to human freedoms, individual rights, and the meaning of truth and reality. “This book,” Scharre summarizes, “is about the darker side of AI.” The book avoids much of the headline-grabbing hype that generative systems such as ChatGPT have unleashed around AI.

Four Battlegrounds is sprawling in scope, encompassing both the technical elements of AI and this “general-purpose enabling” technology’s geopolitical and military implications. In classic think-tanker fashion, Scharre leverages interviews held with experts in diverse fields — including AI research, business, defense, and foreign policy — to provide the reader with an abundance of direct, personalized, and behind-the-scenes information. Scharre then weaves this information together with his core thesis: that AI will have as transformative an impact on power and warfare as past industrial revolutions. Critically, just as past industrial revolution-era technologies altered the metrics by which power is measured, so, too, will AI.

Scharre breaks down AI’s role in global affairs into four “battlegrounds”: data, compute, talent, and institutions. Here, “compute” refers to the computing hardware used to train AI models and process data, while “institutions” refers to states’ domestic AI research labs and to governmental and defense organizations capable of effectively adopting AI systems. The book hedges on exactly which forms AI will take as the technology continues to develop and proliferate, while assessing states’ willingness to adopt it in reference to these four components. “As AI evolves, the relative value of the significance of these inputs could advantage some actors and disadvantage others, further altering the global balance of AI power.” The landscape is far from predetermined: “governments have choices in how they respond to the AI revolution.”

Global AI competition

Scharre does not consider the implications of his framework for the Middle East and North Africa (MENA) region in detail. The book, in many ways, is an invitation to open up regional discussions on AI adoption and relative national power, so long as one considers the technological elephants in the room: the United States and China.

Scharre homes in on the U.S.-China relationship, its deterioration, and AI’s role as a focal point for both Washington and Beijing in their pursuit of national superiority. He sees the rush to outcompete one another in basic AI research and development as similar, if not perfectly analogous, to the U.S.-Soviet space race: in both cases, pursuing the lead is believed to produce knock-on economic and national security effects. Scharre’s U.S.-China focus thus appears to have two primary underpinnings. The first is the idea that the “U.S.-China relationship will have profound consequences for the future of global AI research, talent flows, supply chains, and cooperation.” The second is Scharre’s own trip to China and his limited access to figures there, including iFLYTEK vice president Lin Ji, combined with outside research on the country’s AI ambitions.

Four Battlegrounds thus does not devote extended attention to the framework’s implications for the MENA region. But because AI’s four components are taken to vary in relative importance as AI matures over time, and because states have varying access to these four components, as well as a range of intentional choices for harnessing them, Scharre’s framework is global in scope. Indeed, one of his more confident predictions about the future of AI in geopolitics is “a mixed outcome in which the cost of basic research continues to rise … but research breakthroughs quickly proliferate to actors with fewer resources.”

Divisions in technological capacity and available resources among MENA states are substantial, but the lens through which Four Battlegrounds presents AI is already converging with regional developments. U.S.-China technological decoupling is an unavoidable risk for MENA states, triggering, as Mohammed Soliman observes, “a growing digital divide” between a subregion of Gulf states, North African economies, and the Levant “that exacerbates the challenges of finding a digitally capable workforce.” Indeed, even within Gulf Cooperation Council (GCC) states, a recent McKinsey survey of senior executives, board directors, and industry experts found that “organization and talent,” broadly construed, is the greatest challenge to commercial AI adoption. MENA states are nonetheless, as Soliman says, “pursuing policies designed to build up the region’s digital and tech sovereignty,” echoing the emphasis on agency that runs throughout Four Battlegrounds.

Falcon: A case study of Four Battlegrounds

Recent AI developments in the United Arab Emirates already show clever use of AI’s four components. The “Falcon-40B” foundation model, a large language model (LLM), was not only developed indigenously by the Abu Dhabi-based Technology Innovation Institute (TII) but has since been made open-source for research and commercial uses. TII moved quickly to make Falcon-40B available on Amazon SageMaker, a machine learning hub within Amazon Web Services, allowing widespread consumer deployment of the model. The move came after Falcon-40B topped AI company Hugging Face’s Open LLM Leaderboard in June 2023, beating previously leading open-source models like Meta’s LLaMA, while its smaller counterpart, Falcon-7B, is top in its weight class (note that some state-of-the-art models, like OpenAI’s GPT-4, are closed-source).

The details will be spared here, but Falcon-40B’s design offers some clues about TII’s plans. Falcon-40B is “English-Centric” while also capable of producing text in German, Spanish, French, Italian, Portuguese, Polish, Dutch, Romanian, Czech, and Swedish. Its developers borrowed some fundamental features of GPT-3 and of machine learning generally (notably by prioritizing the quality of the training data, an underappreciated but established aspect of the field), while also making modifications that improve its performance and lower its computational burden. The open-source Falcon model was also not fine-tuned for any specific use case, allowing TII to issue a global Call for Proposals to experiment with Falcon-40B’s capabilities in novel use cases across industries, with accepted applicants granted access to training compute.

These choices are as commercial as they are geopolitical: they are intentional design decisions that, not coincidentally, align with the UAE’s national ambitions. TII likely did not expect Falcon to top Hugging Face’s Leaderboard, but consider the broader context. Using the comparatively limited compute and talent available to it, TII chose to build a model in the same family as OpenAI’s GPT-3 while making the conscious decision to fully open-source it, unlike OpenAI, which keeps the technical details of its most advanced systems secret. Falcon may be considered an early MENA case study in the manipulation of AI’s four battlegrounds within a broader geopolitical context.

Other MENA states, like neighboring Oman, are taking notice of the economic knock-on effects of AI adoption, unveiling a new national economic initiative to facilitate AI adoption by governmental institutions and to increase science and technology investment opportunities. The recent Gulf Information Technology Exhibition (GITEX) hosted in Marrakech, furthermore, highlighted an enthusiasm about AI for the African continent’s development and the improvement of human labor. Such enthusiasm comes as U.S.-based technology multinationals — including IBM and Google — begin modestly investing in the continent’s AI potential, seeking to link technological innovation in public health and medicine with a burgeoning, tech-savvy youth demographic. Beneath it all, data, compute, talent, and institutions shape the trajectory of AI and its impact on relative national power, and constituent states in these regions are exploring new avenues for exploiting them.

None of this is to exaggerate the challenge that open-source AI poses to tech giants like Google, Microsoft, and OpenAI. Indeed, the proliferation of LLMs and the specific way in which Falcon was released are consistent with the future Scharre considers most likely: leading technology companies will retain their edge in basic research, but their breakthroughs will not remain entirely their own for long. In this way, some MENA states can already act to leverage AI’s four components to their national advantage.

Too much hedging?

If there is any general criticism to be leveled against the framework in Four Battlegrounds, it is that it allows Scharre to hedge a bit too much on the technical future of AI and its effects on relative national power. He is, to put it bluntly, too polite. Scharre takes care not to overinflate any one aspect of AI: where one AI success is described, its mirrored failure is also discussed. The reader is reminded at appropriate intervals that machine learning-based systems are fundamentally limited: they are constrained by the types of data they are trained on, narrowly applicable, generally brittle, and lacking in after-the-fact explainability. He believes that “any predictions” regarding AI’s maturation “should be taken with a grain of salt,” going so far as to allow that AI could either “peter out” or “continue to mature.”

This is a welcome departure from much of the AI discourse today. But Scharre hedges so much on AI’s future that his stated possibilities for the technology begin to rub uncomfortably against his own thesis: that states have agency in how they deal with AI’s four key components. The technical matters on which he hedges are ones he recognizes to be of direct geopolitical interest, and their different resolutions amount to dramatically different trajectories for AI and, thus, for national power. This is not a bad thing in itself, because Scharre largely avoids a common habit of think-tank commentary on emerging technologies: “[coalescing] around familiar and intuitive analytic frames and policy ideas,” rushing into the latter without serious consideration of the former. What is problematic is the way he hedges on the trajectory of basic research in AI, which by the end of the book seems almost detached from states’ intentions.

States increasingly see the indigenous development of LLMs, in geopolitics and strategy researcher Paul Fraioli’s words, “as a point of national pride.” And witnessing the almost urgent efforts within some MENA states, like the UAE and Saudi Arabia, to digitally transform within shifting geopolitical dynamics is to recognize that such states are not “passive participants” in the age of AI, but attentive, forward-looking actors embracing technological initiatives for the sake of national development, a point consistent with Four Battlegrounds’ core thesis.

But LLMs and other generative AI systems are not everything, and they still face serious technical challenges in reducing “hallucinations,” a prerequisite for integrating them into enterprise systems. If state-of-the-art generative AI systems cannot make verifiable, transparent progress on several fundamental shortcomings, they may become solutions in search of commercial problems. Military applications are a different ballgame (my colleagues Robin Fontes and Jorrit Kamminga rightly describe the Russia-Ukraine war as an “AI laboratory”), but experimentation does not occur in a vacuum.

Interestingly, Scharre notes that these shortcomings could persist even as systems become more powerful. He closely ties greater capability to growth in the size of AI models, in the datasets on which they are trained, and in the compute needed to research and build state-of-the-art systems. Models trained on multiple types of data, including images and video, may eventually “associate concepts represented in multiple formats.” But Scharre hedges, warning that new advancements bring new vulnerabilities. Furthermore, the levels of compute and equipment needed are potentially unsustainable for private AI labs, possibly shifting the burden to governments keen on harnessing AI.

My sense is that Scharre personally believes that the proof of the utility of ever-larger models is already here, “yielding more impressive fundamental breakthroughs than other methods.” He envisions a possible future in which computing-intensive research is a “strategic asset,” pitting U.S. companies’ roles in advanced hardware and equipment manufacturing against those of Chinese firms. But he appears to settle on a final possibility in which state-of-the-art AI systems remain the purview of “the most capable actors” for only a short period before being replicated by those with fewer resources due to a “combination of open publication and improved computational efficiency through hardware and algorithmic improvements.”

A future in which AI continues to suffer from these limitations would be strange and appears to contradict the thesis of the book: that states have agency in how they deal with AI. Yet state-of-the-art AI systems today, including those in prominent areas like language modeling (supporting chatbots) and computer vision (supporting self-driving vehicles), frequently fail to provide “performance guarantees” in high-stakes use cases, where such failures cannot be ignored. Like Scharre, I do not know exactly what AI will look like in the future. But for the commercial or military deployment of AI systems, especially for high-stakes medical, financial, or defense purposes, it is clear that they must improve on this front. This necessity should inform states’ relationships with AI’s four key components.

The four components of AI vary in importance not just in terms of how state-of-the-art AI systems are built over time, but also in terms of how strongly states are incentivized to innovate their way past AI’s current limitations. It is conceivable that, because AI can be applied to so many commercial uses, and because some applications are more error-tolerant than others, the world’s largest economies can afford to tolerate current trends. The United States’ and China’s relative access to AI’s four components may give them stronger, though not absolute, incentives to largely stay on their current paths.

States with fewer resources are a different story. Those that lack access to enormous volumes of the right kinds of data, for example, cannot afford to overinvest in massive systems for only limited, error-prone returns. States that do have such access may nonetheless lack the talent to engineer that data, or the computing hardware to train models and process it. But the “data,” “talent,” and “compute” factors do not predetermine states’ intentions. States lacking in any one area may still seek to build competitive AI systems through different means. Water-strapped MENA states, for example, may not be able to tolerate the water-cooling needs of systems like ChatGPT indefinitely (which, over millions of uses, appear significant). But this does not mean that MENA states will simply accept the medium- or long-term trajectories set for AI by companies based in the U.S. or China.

Conclusion

Four Battlegrounds successfully approaches an enormously complex topic with an appreciation for the many pitfalls along the way. Scharre’s work serves as an excellent invitation for both regional and interdisciplinary conversations on the role of AI in the future of power and warfare, with immediate implications for MENA states actively working to digitally transform. I recommend Four Battlegrounds for those interested in an accessible breakdown of modern AI’s technical and material underpinnings, its diffusion across the international system and effects on relative national power, and a litany of often-personalized accounts of how efforts to weaponize AI for harmful ends within and across societies are being met with urgent countermeasures. The global scope of the book, combined with Scharre’s sensitivity to the shortcomings and dangers of existing AI systems, makes it a valuable contribution to understanding the dynamics that increasingly define the geopolitical landscape.

Vincent J. Carchidi is a Non-Resident Scholar with MEI’s Strategic Technologies and Cyber Security Program and an Analyst at RAIN Defense+AI. His work focuses on emerging technologies, defense, and international affairs.

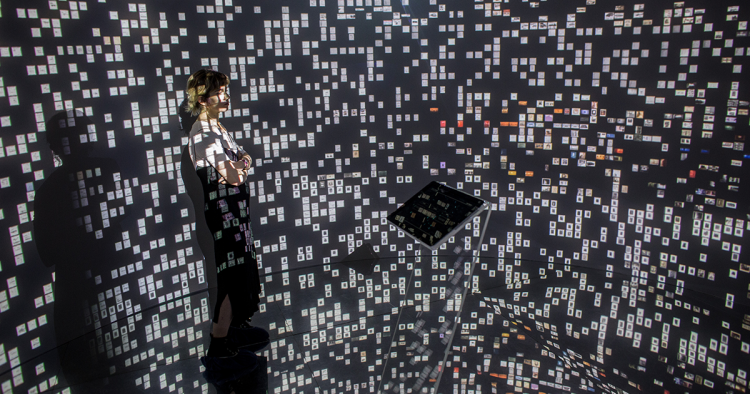

Photo by Chris McGrath/Getty Images

The Middle East Institute (MEI) is an independent, non-partisan, not-for-profit, educational organization. It does not engage in advocacy and its scholars’ opinions are their own. MEI welcomes financial donations, but retains sole editorial control over its work and its publications reflect only the authors’ views.